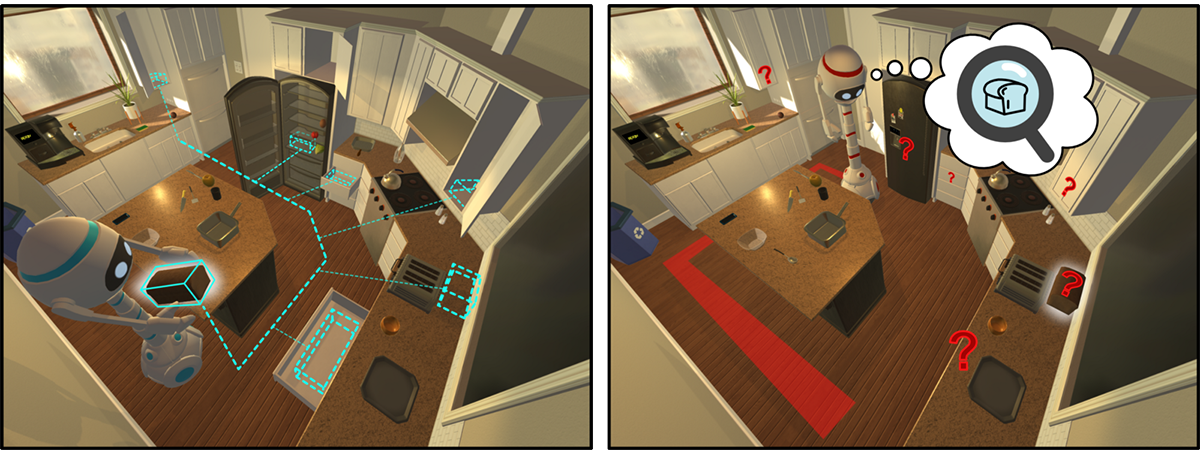

In this work, we study how interaction and play can be used as a new paradigm for training AI agents to understand their world. Instead of learning from huge, static datasets of manually labeled images, our agents learn by playing Cache, a variant of hide-and-seek in which agents hide objects for other agents to find. After our agents have learned to play Cache, we study how they represent both individual images (the classical domain of computer vision research) and, more holistically, their dynamic, changing environment. Notably, through playing Cache, our agents develop sophisticated single-image representations (sometimes even surpassing gold-standard supervised methods) and begin to exhibit select cognitive primitives studied by developmental psychologists, such as object permanence.

See our blog post for a high-level overview of our main results.

| Luca Weihs | Aniruddha Kembhavi | Kiana Ehsani | Sarah M Pratt | Winson Han |
|---|---|---|---|---|
| Alvaro Herrasti | Eric Kolve | Dustin Schwenk | Roozbeh Mottaghi | Ali Farhadi |