Embodied agents require a general representation of objects in a scene to operate within household environments. To this end, we employ a contrastive loss to learn features that represent relationships between pairs of objects. We show how the resulting representation can be used downstream for visual room rearrangement, an interactive task in AI2-THOR, without any additional training. We additionally probe the representation to evaluate whether it implicitly encodes interpretable relationships between objects (e.g., a cup on top of a table). We also evaluate its ability to retrieve 3D room layouts against hard negative images that look visually similar. Finally, we explore applications of our features for object tracking in YCB-Video, a real-world dataset.

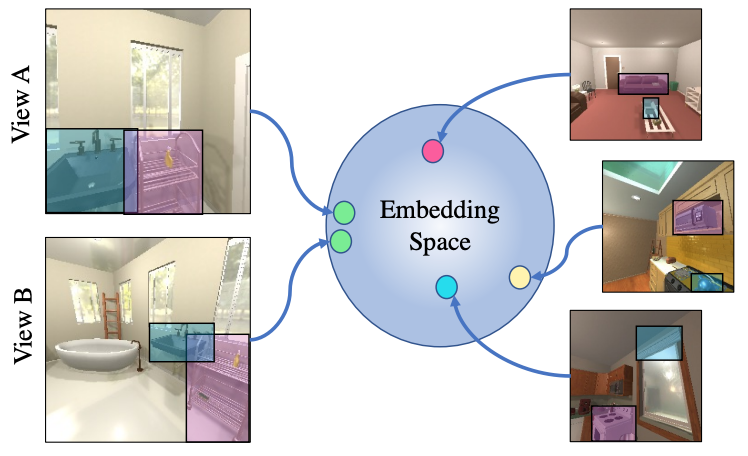

A contrastive loss encourages features of the same relationship observed from different views to be close in feature space and far from features of other relationships. Such a representation allows us to detect when objects have moved in a scene (i.e., when the underlying spatial relationship changes). This information is critical for the downstream task of visual room rearrangement.
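To make the idea concrete, the sketch below uses an InfoNCE-style loss, which is one common form of contrastive loss. The source does not specify its exact loss, so the temperature, the cosine similarity, and the function names `info_nce_loss` and `relationship_changed` are illustrative assumptions. Row `i` of each view holds the feature of the same relationship seen from a different viewpoint, so matching pairs sit on the diagonal of the similarity matrix. The change check compares a relationship's feature before and after an interaction against a hypothetical similarity threshold.

```python
import numpy as np


def l2_normalize(x: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Scale vectors along the last axis to unit length."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def info_nce_loss(view_a: np.ndarray, view_b: np.ndarray, temperature: float = 0.1) -> float:
    """InfoNCE-style contrastive loss (an assumed form, not necessarily the paper's).

    view_a[i] and view_b[i] are features of the same object-pair relationship
    observed from two views; all other rows act as negatives.
    """
    a = l2_normalize(view_a)
    b = l2_normalize(view_b)
    logits = a @ b.T / temperature  # (N, N) cosine similarities scaled by temperature
    # Numerically stable row-wise log-softmax.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Positives lie on the diagonal; minimizing this pulls them together
    # and pushes non-matching relationships apart.
    return float(-np.mean(np.diag(log_probs)))


def relationship_changed(feat_before: np.ndarray, feat_after: np.ndarray,
                         threshold: float = 0.5) -> bool:
    """Flag a moved object when a relationship's feature drifts too far.

    The threshold is a hypothetical value chosen for illustration.
    """
    sim = float(l2_normalize(feat_before) @ l2_normalize(feat_after))
    return sim < threshold


# Toy example: four orthogonal "relationship" features in a 16-d space.
rel = np.eye(4, 16)
print(info_nce_loss(rel, rel))                # small: views agree
print(info_nce_loss(rel, rel[[1, 2, 3, 0]]))  # large: views are mismatched
print(relationship_changed(rel[0], rel[0]))   # False: relationship unchanged
print(relationship_changed(rel[0], rel[1]))   # True: relationship changed
```

In practice the features would come from a learned encoder over image regions of object pairs. The toy vectors here only show that the loss is low when views agree and high when they are mismatched.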