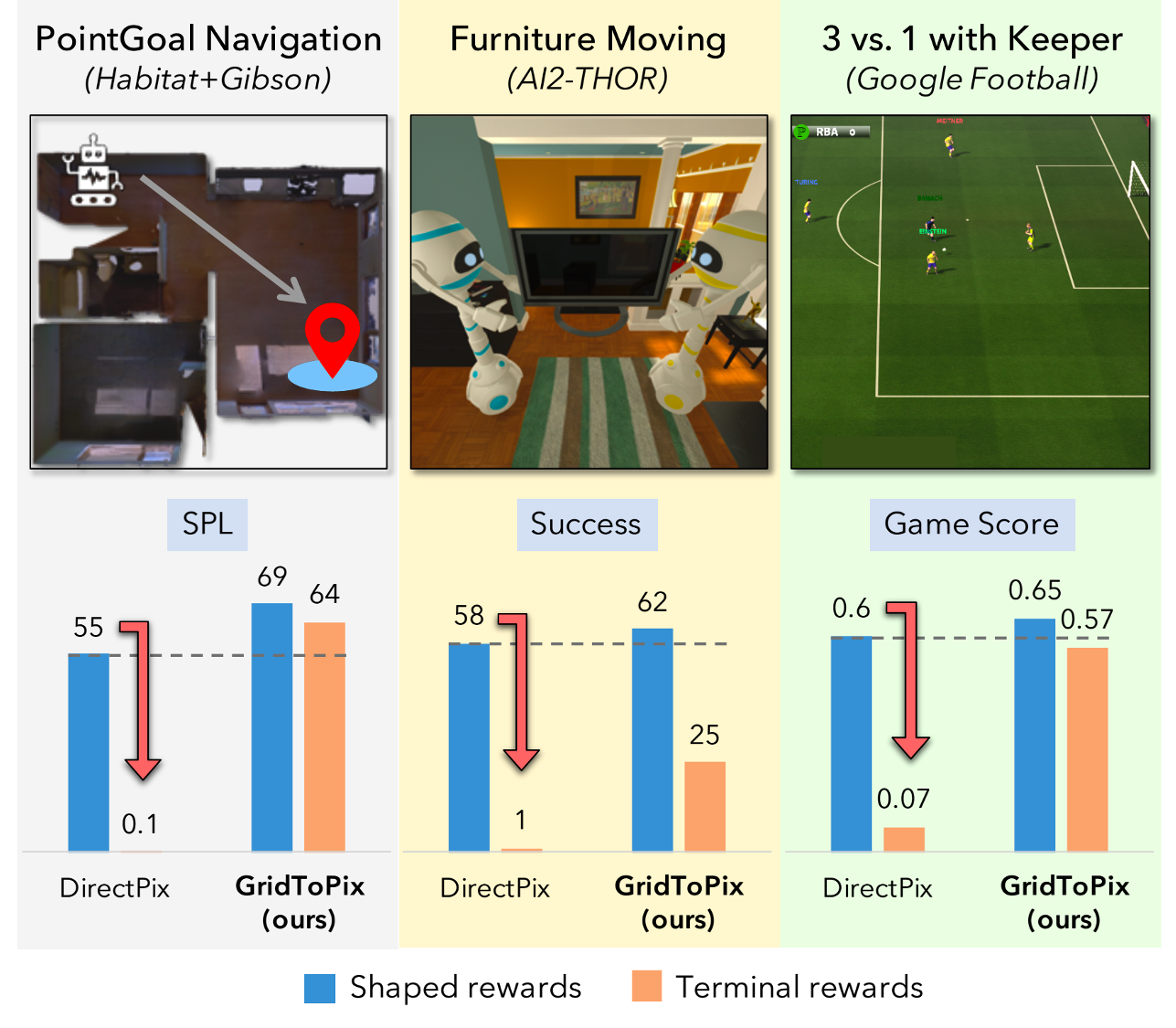

While deep reinforcement learning (RL) promises freedom from hand-labeled data, great successes, especially for Embodied AI, require significant work to create supervision via carefully shaped rewards. Indeed, without shaped rewards, i.e., with only terminal rewards, present-day results degrade significantly across Embodied AI problems, from single-agent Habitat-based PointGoal Navigation and two-agent AI2-THOR-based Furniture Moving to three-agent Google Football-based 3 vs. 1 with Keeper. As training from shaped rewards does not scale to more realistic tasks, the community needs to improve the success of training with terminal rewards. For this we propose GridToPix: 1) train agents with terminal rewards in gridworlds that generically mirror Embodied AI environments, i.e., they are independent of the task; 2) distill the learned policy into agents that reside in complex visual worlds. Despite learning from only terminal rewards with identical models and RL algorithms, GridToPix significantly improves results across the three diverse tasks.
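To make the second GridToPix step concrete, the following is a minimal sketch of one common way to distill a policy: the visual agent is trained to match the action distribution of the gridworld-trained agent via a cross-entropy loss. The function names (`softmax`, `distillation_loss`) and the four-action example are illustrative assumptions, not the exact loss or action space used in this work.

```python
import math

def softmax(logits):
    """Convert raw action scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(expert_probs, student_logits):
    """Cross-entropy between the gridworld expert's action distribution
    and the visual student's predicted distribution (hypothetical helper)."""
    student_probs = softmax(student_logits)
    return -sum(p * math.log(q)
                for p, q in zip(expert_probs, student_probs) if p > 0)

# Assumed toy setting: four discrete actions, expert prefers action 0.
expert = [1.0, 0.0, 0.0, 0.0]
untrained_student = [0.0, 0.0, 0.0, 0.0]   # uniform over actions
aligned_student = [5.0, 0.0, 0.0, 0.0]     # mostly agrees with the expert

loss_untrained = distillation_loss(expert, untrained_student)
loss_aligned = distillation_loss(expert, aligned_student)
```

In this sketch the loss shrinks as the student's action distribution approaches the expert's, which gives the visual agent a dense learning signal at every step even though the expert itself was trained from terminal rewards only.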

We consider a variety of challenging tasks in three diverse simulators: single-agent PointGoal Navigation in Habitat, two-agent Furniture Moving in AI2-THOR, and three-agent 3 vs. 1 with Keeper in the Google Research Football Environment. Given only terminal rewards, we find that the performance of these modern methods degrades drastically: SPL drops from 55 to 0 on PointGoal Navigation, success drops from 58% to 1% on Furniture Moving, and game score drops from 0.6 to 0.1 on 3 vs. 1 with Keeper. Often, no meaningful policy is learned despite training for millions of steps. These results are eye-opening and a reminder that we are still a long way off in our pursuit of embodied agents that can learn skills with minimal supervision, i.e., supervision in the form of only terminal rewards.
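The difference between the two supervision regimes can be illustrated with a small sketch for a navigation-style task. The shaped reward below (progress toward the goal, a per-step penalty, and a success bonus) is a typical form and is an assumption for illustration; the constants, the success radius, and the function names are hypothetical and not taken from any of the three simulators.

```python
import math

def terminal_reward(done, success):
    """Reward only when the episode ends successfully; zero everywhere else."""
    return 1.0 if (done and success) else 0.0

def shaped_reward(prev_dist, curr_dist, done, success,
                  step_penalty=0.01, success_bonus=1.0):
    """Dense reward: progress toward the goal, minus a step penalty,
    plus a bonus on success (assumed form, hypothetical constants)."""
    progress = prev_dist - curr_dist
    bonus = success_bonus if (done and success) else 0.0
    return progress - step_penalty + bonus

# Toy trajectory: the agent walks in a straight line to the goal.
goal = (3.0, 0.0)
path = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]

terminal, shaped = [], []
for i in range(1, len(path)):
    done = i == len(path) - 1
    success = done and math.dist(path[i], goal) < 0.2  # assumed success radius
    terminal.append(terminal_reward(done, success))
    shaped.append(shaped_reward(math.dist(path[i - 1], goal),
                                math.dist(path[i], goal), done, success))
```

Under the terminal reward, every step before the last returns zero, so an agent that rarely reaches the goal by chance receives almost no learning signal. The shaped reward gives feedback at every step, but it requires task-specific design such as access to the distance to the goal.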