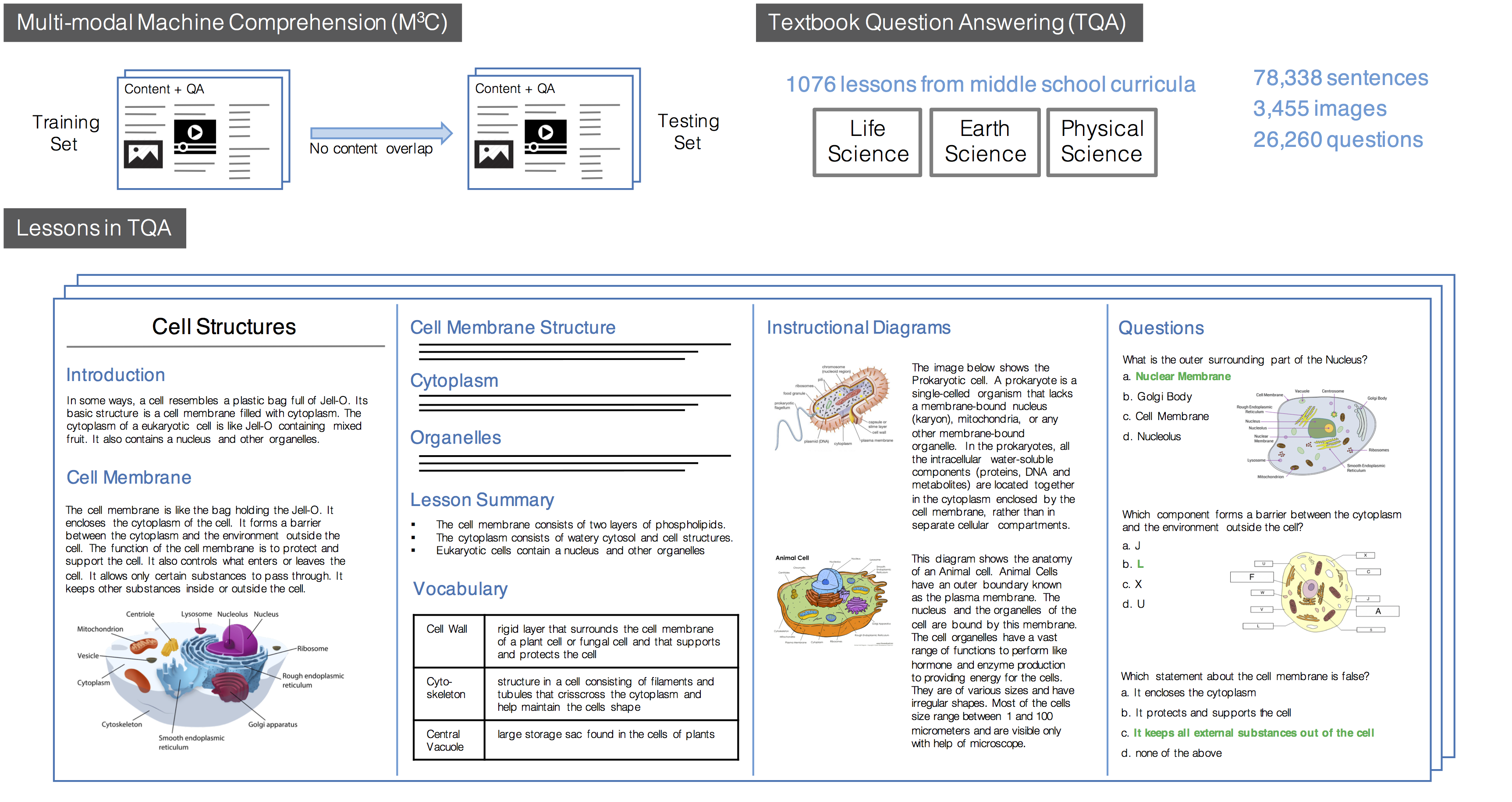

The TQA dataset encourages work on the task of Multi-Modal Machine Comprehension (M3C) task. The M3C task builds on the popular Visual Question Answering (VQA) and Machine Comprehension (MC) paradigms by framing question answering as a machine comprehension task, where the context needed to answer questions is provided and composed of both text and images. The dataset constructed to showcase this task has been built from a middle school science curriculum that pairs a given question to a limited span of knowledge needed to answer it.

TQA was constructed in large part from material freely available as part of ck12's (http://www.ck12.org) open-source science curriculum. It is distributed under a Creative Commons Attribution – Non-Commerical 3.0 license.

Instructional MaterialLessons in TQA each have a limited amount of multimodal material that contains the information needed to answer that lesson's text and diagram questions. Textual material is broken down by subtopic within the lesson. Figures and instructional diagrams are linked from the topic in which they appear.

QuestionsTQA is split into a training, validation and test set at lesson level. The training set consists of 666 lessons and 15,154 questions, the validation set On Care has been taken to group these lessons before splitting the data, so as to minimize the concept overlap between splits.

Questions are separated by type in the dataset (nested under diagramQuestions and nonDiagramQuestions), and are also distinguished by global id prefixes indicating an associated diagram (DQ_ and NDQ_).

ImagesImages are divided into four types: